"Lorem ipsum is latin, slightly jumbled, the remnants of a passage from Cicero's _de Finibus_ 1.10.32, which begins 'Neque porro quisquam est qui dolorem ipsum quia dolor sit amet, consectetur, adipisci velit...' [There is no one who loves pain itself, who seeks after it and wants to have it, simply because it is pain.]. [de Finibus Bonorum et Malorum, written in 45 BC, is a treatise on the theory of ethics very popular in the Renaissance.]

"What I find remarkable is that this text has been the industry's standard dummy text ever since some printer in the 1500s took a galley of type and scrambled it to make a type specimen book; it has survived not only four centuries of letter-by-letter resetting but even the leap into electronic typesetting, essentially unchanged except for an occasional 'ing' or 'y' thrown in. It's ironic that when the then-understood Latin was scrambled, it became as incomprehensible as Greek; the phrase 'it's Greek to me' and 'greeking' have common semantic roots!"

- Richard McClintock, in a 1994 letter to the editor of Before & After magazine, describing his discovery of Lorem ipsum’s origin

Mock data or synthetic data is not a replacement for real data, but it is a technology with significant utility in testing and development. High-fidelity mock data can considerably accelerate software development lifecycles. Yet, if you look at the history of mock data generation, it has remained fairly stagnant for decades and still needs a lot of work to get it right. While we have seen evolutionary progress, the revolution has yet to come.

So, what would this revolution look like? “High-fidelity mock data that just works!” screamed a random programmer holding a Quackles rubber duck. But what does “high fidelity” mean in this context? I believe what we really want is a technology that can deeply understand a user’s schema and its underlying goals to generate high-fidelity synthetic data for a test database in “one-click”.

At Neurelo, our goal is to make it way easier to build with databases. Many of our users initially connect to us with an empty data source to evaluate our platform. And, there are those who work on new features by taking their current schema, making changes, testing them, and committing the changes once they are satisfied with their schema and its corresponding Neurelo auto-generated and Custom APIs. To test these changes, they usually also start with an empty database. Early on, we realized that to provide these users with a production-like experience when using these APIs, it was essential for us to intelligently mock data for their empty data sources. And that's exactly what we did.

In this blog post, I will discuss how we went about creating Neurelo’s “mock data generation” technology—the nuts and bolts, gears and cogs, silos and pipelines of it all!

When we started this project, we had five requirements in mind:

It should be diverse, meaning it should work with all three data sources we support: MongoDB, MySQL, and Postgres.

It should generate realistic data based solely on the schema, without requiring any external user input—a “one-click” solution with minimal friction.

The cost should be as low as possible, balancing scalability with accuracy.

Response time should be very fast.

We should use entirely native Rust to do this.

To understand why it was essential for us to use Rust for this project, let’s take a look at a simplified view of Neurelo’s underlying architecture:

Neurelo’s management layer hosts our entire frontend and is primarily responsible for communicating various user-specified actions to our operations plane. The operations plane, in turn, is where the actual execution of jobs takes place. In many cases, it delegates these jobs to be run against our query runners.

Our query runners are at the heart of Neurelo. They are primarily responsible for:

Translating incoming data APIs and generating queries against the database,

Introspecting an existing database schema,

Managing schema changes over time using our migration tools, etc.

“Mock Data Generation” acts as another “job” in the operations plane, which eventually gets delegated to our query runners. This is crucial because it allows us to leverage our existing Write API code paths to execute queries against the database without having to build new paths. This approach benefits us in terms of both performance and maintainability. Since our entire query runner is written solely in Rust, we needed a native Rust-based mock data generator.

LLMs to the rescue?

Right from the outset, we thought LLMs would be a great fit for this and asked ourselves how we could leverage LLMs to accomplish this.

Our initial approach involved using LLMs to generate Rust code that would dynamically create raw INSERT queries. However, despite several attempts at prompt tuning, we were unable to achieve reproducible accuracy over multiple iterations. Two significant issues plagued this approach: first, the generated Rust code did not always compile, and second, when it did eventually compile, the quality of the synthetic data was subpar. It tended to regress to generic formats like “Movie1,” “Movie2,” etc., for movie names, despite explicit prompt tuning.

We could have tried extending beyond the zero-shot learning methodology and fine-tuned prompts further, but it didn’t take long for us to realize we were spinning our wheels with this approach and needed a more deterministic foundation.

But curiosity got the better of us, and we wondered, could we do the same in Python? Based on our understanding, LLMs are particularly effective at writing executable Python code. Additionally, prompting them to leverage Python’s “faker” third-party module could help us mitigate the second issue as well.

This is when we stumbled upon a rabbit hole! You see, when mocking multiple tables for a database schema, the order of insertion matters!

Mad Hatter pulls out the “Order of Insertion”

Say you have three tables, A, B, and C, in a database schema. Table A has a foreign key, B_fk, corresponding to table B’s primary key. Similarly, table B has a foreign key, C_fk, corresponding to table C’s primary key. In this scenario, it is crucial to follow the correct insertion order to maintain the referential integrity enforced by the foreign keys.

For this, we should first mock table C since it does not have any foreign keys, meaning it does not depend on rows in any other table. Next, we can use this data and mock table B, which is now possible because we have ensured that the referenced records in table C (via C_fk) exist. Finally, we can mock table A.

As you can see from the above example, the generation of INSERT statements for each table must respect their relationships, and the order matters. As the French proverb goes, “Il ne faut pas mettre la charrue avant les bœufs” (loosely translated to “We must not put the cart before the horse”).

So, let us set aside LLMs for a moment and ask ourselves: given a DB schema, how can we ensure that the order of insertion is always correct? Or, in other words, how do we derive an accurate order of insertion from the DB schema?

The answer - Topological sorting!

The first step is to create a directed acyclic graph (DAG) from the relationships present in our DB schema. A DAG is a type of graph where edges have a direction and there are no cycles. This means that you cannot start at one node and follow a path that eventually loops back to the same node.

Note the word “acyclic.” A topological ordering is possible if and only if the graph has no directed cycles!

The simplest way to perform topological sorting is by using Kahn’s algorithm. The idea is as follows:

Start by calculating the in-degree (number of incoming edges) for each node in the graph.

Next, enqueue all nodes with an in-degree of 0, meaning nodes with no incoming edges.

While the queue is not empty: dequeue a node N and add it to the topological sort’s result; for each of N’s neighboring nodes, reduce its in-degree by 1; if any neighboring node’s in-degree becomes 0, enqueue it.

Continue until the queue is empty.

The order in which the nodes are added to the topological sort’s result represents the topological sort of the DAG.

Here’s an example to demonstrate this:

“But what if my schema has recursion in it?” a curious George asks. Well, as you might have noticed, cycles complicate Kahn’s algorithm. Imagine you had a cycle involving Authors. In such a scenario, none of the in-degrees would start at zero. Moreover, if the cycle were on Books, this would create complications when reducing the in-degrees of the neighboring nodes by 1, potentially leading to a situation where not all nodes are traversed.

If you think about it, the fact that Kahn’s algorithm cannot process cyclic relationships can actually be a blessing in disguise. Once the queue is empty, if there are still nodes left in the graph that were never dequeued (i.e., their in-degree never dropped to 0), we can be certain that cycles exist.
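Kahn’s algorithm, together with the leftover-node cycle check, can be sketched in a few lines of Python. This is a minimal illustration and not Neurelo’s Rust implementation: the `insertion_order` function and the `(child, parent)` edge format are assumptions made for the example, and the schema is the A, B, C example from earlier.

```python
from collections import deque

def insertion_order(tables, foreign_keys):
    """Kahn's algorithm over a schema graph.

    `foreign_keys` is a list of (child, parent) pairs, meaning `child`
    holds a foreign key that references `parent`.
    """
    dependents = {t: [] for t in tables}  # parent -> tables referencing it
    in_degree = {t: 0 for t in tables}    # parents not yet processed
    for child, parent in foreign_keys:
        dependents[parent].append(child)
        in_degree[child] += 1

    # Tables with no foreign keys can be mocked immediately.
    queue = deque(t for t in tables if in_degree[t] == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in dependents[node]:
            in_degree[child] -= 1
            if in_degree[child] == 0:
                queue.append(child)

    # Anything never dequeued sits on (or behind) a cycle.
    if len(order) != len(tables):
        leftover = sorted(set(tables) - set(order))
        raise ValueError(f"cycle detected among tables: {leftover}")
    return order

# A references B, and B references C.
order = insertion_order(["A", "B", "C"], [("A", "B"), ("B", "C")])
print(order)  # ['C', 'B', 'A']
```

Edges point from the referenced table to the table that references it, so a table only becomes eligible once every table it depends on has been processed.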

If cyclic relationships are essential, one way to handle them is by breaking cycles using NULL values. The first step is to identify where the cycles are occurring, which can be done using Tarjan's strongly connected components algorithm. When inserting mock data, we can temporarily insert NULL values in the foreign key fields. After all the mock data has been inserted for each strongly connected component, we can update the NULL foreign keys with the actual mock data values. Note that this assumes the foreign key fields can be nullable. There are other pitfalls as well, such as what happens if the table has database triggers (like AFTER INSERT) that depend on foreign key relationships. Inserting NULL values should not trigger incorrect behavior in such scenarios. Composite keys add another layer of complexity to this. All of these are things we needed to handle.
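The NULL-then-update idea can be illustrated with Python’s built-in `sqlite3` module. This is only a sketch under assumptions: the `authors` and `books` tables, their columns, and the inserted values are hypothetical, and SQLite stands in for the databases discussed in this post. It presumes the cycle has already been identified and that one side of it is nullable.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript("""
    CREATE TABLE authors (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL,
        favorite_book_id INTEGER REFERENCES books(id)  -- nullable side of the cycle
    );
    CREATE TABLE books (
        id INTEGER PRIMARY KEY,
        title TEXT NOT NULL,
        author_id INTEGER NOT NULL REFERENCES authors(id)
    );
""")

# Phase 1: insert the author with the cyclic foreign key left as NULL.
conn.execute(
    "INSERT INTO authors (id, name, favorite_book_id) VALUES (?, ?, NULL)",
    (1, "Ada"),
)
# Phase 2: the book can now reference an author that exists.
conn.execute(
    "INSERT INTO books (id, title, author_id) VALUES (?, ?, ?)",
    (10, "Notes", 1),
)
# Phase 3: backfill the NULL now that the referenced book exists.
conn.execute("UPDATE authors SET favorite_book_id = ? WHERE id = ?", (10, 1))
conn.commit()

row = conn.execute(
    "SELECT favorite_book_id FROM authors WHERE id = 1"
).fetchone()
violations = conn.execute("PRAGMA foreign_key_check").fetchall()
```

After the backfill, `violations` is empty, meaning every foreign key in the cycle points at a real row.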

Into the Mine Fields of Mock Data Generation

So, how do we go about creating our “mock data generation” technology? As we’ve seen, generating dynamic code using LLMs that in turn dynamically generates raw INSERT queries does not seem to be the solution—regardless of the language! However, there are a few positives we gained from this experimentation:

Leveraging a “faker”-like module can help us generate better quality mock data, elevating us from the generic world of “Name1” to “SpongeBob SquarePants.”

We now have a way to derive an insertion order from our schema.

Returning to the drawing board, we chalked up an interesting idea: instead of using LLMs to generate data, how about using them to predict which method to select from a list of methods that faker-like modules expose? For example, a (Column, ColumnType) of (hashval, string) can be mapped to “md5()”, and a (language, string) can be mapped to “language_name()”. This approach has its caveats, but it’s a perfect starting point. It’s a fast and low-cost solution.

To implement this, we leveraged Neurelo’s JSON-schema spec, which we call Neurelo Schema Language (NSL). Once a user introspects their data source with Neurelo, we use this NSL spec throughout to represent their schema.

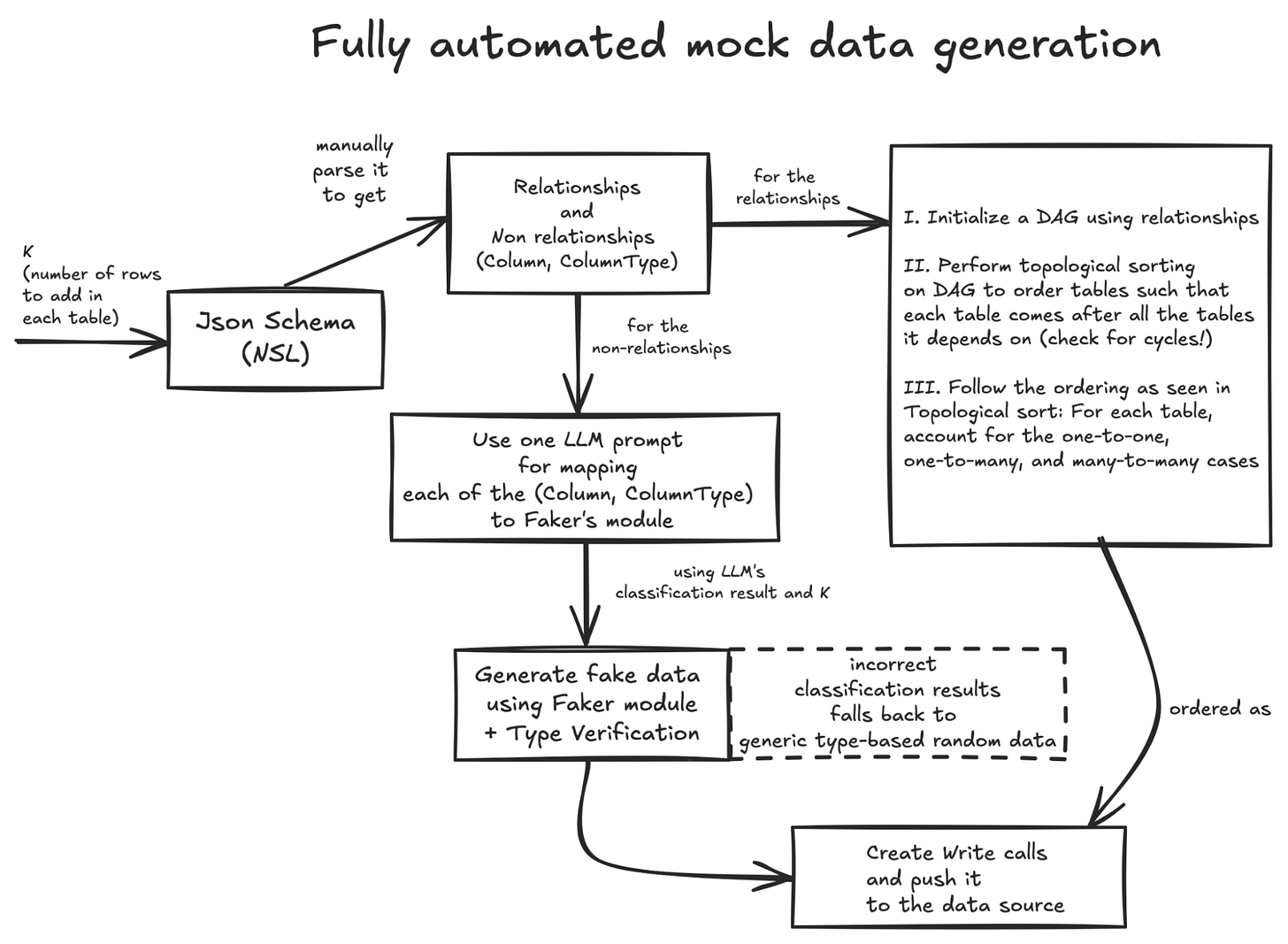

This schema can now become the anchor for us to build our mock data generation logic. Here’s what our 1.0 “Mock Data Generation” logic looked like:

The LLM prompt itself classifies an array of tuples of the form (column name, column type) into a JSON dictionary where the classification results are present:

    {
        table_name_1: {
            column_name_1: mapped_method_1,
            column_name_2: mapped_method_2,
        },
        table_name_2: {
            column_name_1: mapped_method_1
        },
        .....
    }

As we couldn’t find any suitable alternative to Python’s faker module in Rust, we ended up writing an equivalent in Rust.
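To make the classify-then-dispatch idea concrete, here is a small Python sketch (Python is used only for brevity; the generator described in this post is written in Rust). Everything in it is hypothetical: the three generator functions are hand-written stand-ins for faker-style methods, and the `classification` dictionary is an assumed example of what the LLM step might return.

```python
import hashlib
import random

# Hypothetical stand-ins for faker-style methods.
def md5(rng):
    return hashlib.md5(str(rng.random()).encode()).hexdigest()

def language_name(rng):
    return rng.choice(["English", "French", "Hindi", "Japanese", "Swahili"])

def first_name(rng):
    return rng.choice(["Alice", "Bruno", "Chen", "Divya"])

METHODS = {"md5": md5, "language_name": language_name, "first_name": first_name}

# Assumed shape of the classifier output: table -> column -> method name.
classification = {
    "users": {"name": "first_name", "language": "language_name"},
    "files": {"hashval": "md5"},
}

def mock_rows(classification, rows_per_table, seed=0):
    """Generate rows by calling the method each column was mapped to."""
    rng = random.Random(seed)
    data = {}
    for table, columns in classification.items():
        data[table] = [
            {column: METHODS[method](rng) for column, method in columns.items()}
            for _ in range(rows_per_table)
        ]
    return data

mock = mock_rows(classification, rows_per_table=3)
```

The LLM is consulted once per schema to produce the mapping; generating the rows themselves is then ordinary, cheap code.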

Tangentially, we encountered an interesting issue while implementing this Rust-based faker module. We faced a scenario where the entire module would suddenly panic. Uncertain about the cause, we began narrowing down the data source and its affected versions, discovering that only systems running MySQL versions earlier than 5.6.4 were affected. This led us to the infamous Year 2038 problem associated with 32-bit systems. By constraining our mocked timestamp range to between 1970-01-01 and 2038-01-19, we resolved the issue. As my colleague George aptly put it, dealing with anything closely tied to timestamps is a direct line to a mental institution.
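A constrained timestamp generator might look like the following Python sketch. The post only gives the two boundary dates, so the exact bounds here are an assumption: they are the limits of a signed 32-bit count of seconds since the Unix epoch, which matches the documented range of MySQL’s TIMESTAMP type. The `mock_timestamp` name is hypothetical.

```python
import random
from datetime import datetime, timezone

TS_MIN = 1           # 1970-01-01 00:00:01 UTC
TS_MAX = 2**31 - 1   # 2038-01-19 03:14:07 UTC, the signed 32-bit limit

def mock_timestamp(rng):
    """Return a random UTC datetime that fits in a 32-bit Unix timestamp."""
    seconds = rng.randint(TS_MIN, TS_MAX)  # both ends inclusive
    return datetime.fromtimestamp(seconds, tz=timezone.utc)

rng = random.Random(42)
samples = [mock_timestamp(rng) for _ in range(1000)]
latest_allowed = datetime.fromtimestamp(TS_MAX, tz=timezone.utc)
```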

What’s up with References?

Here is yet another engineering problem: how do we ensure that foreign keys and primary keys are correctly mapped to each other? Moreover, how do we make this work for NoSQL databases such as MongoDB (with object id references)?

One approach is to keep a global index counter whose value corresponds to the row number being mocked. This can be directly useful when auto-increment is enabled for a primary key.

For most scenarios, to ensure that foreign keys and primary keys match correctly while mocking data, one can use the following steps:

Track mock data: Use a dictionary to keep track of the mock data for all tables related to the current row you’re working on.

Mock each table:

For each table, generate mock data based on its columns and their types.

Identify and set the foreign key and primary key relationships so they match in your dictionary.

Store mock data: Save the generated mock data for each table in your dictionary.
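The steps above can be sketched in Python as follows. The schema, table names, and `mock_database` function are hypothetical, tables are assumed to be listed in a valid insertion order (parents first), and non-key columns are omitted to keep the focus on key matching.

```python
import random

# Hypothetical schema, already sorted so that parents come first.
SCHEMA = {
    "authors": {"pk": "id", "fks": {}},
    "books": {"pk": "id", "fks": {"author_id": "authors"}},
    "reviews": {"pk": "id", "fks": {"book_id": "books"}},
}

def mock_database(schema, rows_per_table, seed=0):
    rng = random.Random(seed)
    generated = {}  # the tracking dictionary: table -> rows mocked so far
    for table, spec in schema.items():
        rows = []
        for index in range(1, rows_per_table + 1):
            # The row counter doubles as an auto-increment primary key.
            row = {spec["pk"]: index}
            for fk_column, parent in spec["fks"].items():
                # Pick the key of a parent row that has already been mocked.
                parent_pk = schema[parent]["pk"]
                row[fk_column] = rng.choice(generated[parent])[parent_pk]
            rows.append(row)
        generated[table] = rows  # store so later tables can reference it
    return generated

db = mock_database(SCHEMA, rows_per_table=5)
```

Because every foreign key value is drawn from rows that are already in the dictionary, referential integrity holds by construction.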

However, this approach cannot be directly applied to MongoDB, as it supports implicit referencing. To address this, we used another layer of LLM! For each MongoDB collection corresponding to a database, we identify properties that contain an ObjectId and, by default, treat them as part of a “relation.” The LLM prompt then performs another classification to convert these: {collection_name1: [relation1, relation2, ….., relationN], …..} into a JSON dictionary of {relationX: referencing_collection_nameY, relationA: referencing_collection_nameB, …..} If a relation cannot be accurately mapped to a collection name, we simply discard it by assigning it a JSON null value. We then use these output references to ensure that the ObjectId values match each other.
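As a rough illustration of how the classifier’s output might be consumed, consider the Python sketch below. The property and collection names are made up, `new_object_id` is a stand-in that produces 24 hexadecimal characters rather than a real driver ObjectId, and `None` plays the role of the JSON null assigned to relations that could not be mapped.

```python
import random
import secrets

def new_object_id():
    """Stand-in for a MongoDB ObjectId: 12 random bytes as 24 hex characters."""
    return secrets.token_hex(12)

# Assumed classifier output: relation property -> referenced collection,
# or None when the relation could not be mapped.
relation_map = {
    "authorId": "authors",
    "publisherId": "publishers",
    "legacyRef": None,
}

# Ids already generated for the referenced collections.
ids_by_collection = {
    "authors": [new_object_id() for _ in range(3)],
    "publishers": [new_object_id() for _ in range(2)],
}

def mock_document(relation_map, ids_by_collection, rng):
    doc = {"_id": new_object_id()}
    for relation, collection in relation_map.items():
        if collection is None:
            continue  # unmapped relation: discard it rather than guess
        doc[relation] = rng.choice(ids_by_collection[collection])
    return doc

rng = random.Random(7)
books = [mock_document(relation_map, ids_by_collection, rng) for _ in range(5)]
```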

Et voilà! Referencing just got a pair of Ray-Bans! ;)

Ahem, ahem … Unique Constraints?

We used a similar strategy for ensuring unique constraints. However, we encountered an interesting issue when mocking large amounts of data for a specific foreign key with a unique constraint. Because the LLM maps the foreign key to a faker method, and these methods draw values pseudo-randomly, they can sometimes create duplicate entries, leading to a unique constraint violation. For example, if "zip code" is a foreign key and zip codes are generated using a pseudo-random method, even with just 1,000 rows, duplication can occur (as zip codes are not very long), causing the constraint to fail.

This issue triggers a chain reaction. Since the inserts into the current table fail, subsequent tables that depend on it also fail. The root cause is that not every column has enough distinct values to fill the requested number of rows. This issue extends to the tables themselves as well. One solution is to select unique data from a pool of pre-generated, distinct data rather than generating it on the fly. However, this approach might not be ideal due to memory consumption.
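A quick Python sketch shows both the size of the problem and the pool-based fix. It assumes five-digit zip codes drawn uniformly at random, which the post does not specify; the function names are hypothetical, and the probability uses the standard birthday-problem approximation.

```python
import math
import random

def collision_probability(rows, distinct_values):
    """Birthday-problem approximation of at least one duplicate."""
    return 1 - math.exp(-rows * (rows - 1) / (2 * distinct_values))

def unique_values(pool, count, seed=0):
    """Draw `count` distinct values from a pre-generated pool."""
    if count > len(pool):
        raise ValueError("pool is too small for the requested number of rows")
    return random.Random(seed).sample(pool, count)  # sampling without replacement

# 1,000 random draws from 100,000 possible zip codes almost always collide.
p = collision_probability(1_000, 100_000)  # roughly 0.99

zip_pool = [f"{n:05d}" for n in range(100_000)]  # the memory cost is this list
zips = unique_values(zip_pool, 1_000)
```

The pool guarantees uniqueness, but it has to be materialized up front, which is the memory trade-off mentioned above.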

We have resolved these challenges to a large extent, but there is still more to be done, and we are actively working on it.

Even the best tailors get it wrong

All of this work proved to be a solid start for us, and we did manage to ship a pretty good mock data generator into production and test it with our early users. However, it wasn’t long before we started noticing the familiar weed that has previously poisoned many AI models—“overfitting”!

You see, one of the shortcomings of this implementation is that the quality of the mock data depends directly on the accuracy of our LLM’s classification pipeline. We often encounter a (Column, ColumnType) pair such as (name, string), which is classified as a “name.” However, this can be a red herring: the column might belong to a “Movie” table, a “mediaType” table, or even a “Brand” table. So our next lesson was that integrating table names into the pipeline is absolutely essential.

However, even with table names integrated, there are scenarios where we can’t realistically map them to one of our faker modules. Although we previously implemented around 217 faker modules in Rust, this proved to be a drop in the ocean. Customers can present scenarios like (film, description, string) or (posts, title, string) and expect us to go beyond the age-old “Lorem Ipsum.” To address this, we developed a novel solution called the “Genesis Point Strategy.”

The underlying motivation for the Genesis Point Strategy is that while we would like to leverage LLMs to intelligently generate this fake data, doing so can be both time-consuming and costly. So, how can we achieve this in a cheap, fast, and intelligent way?

In comes the power of a “Cross product”! The idea is that if we want to mock 1,000 rows with the constraint that all rows must be unique, we don’t need to generate 1,000 unique values from GPT. Instead, we need only ceil(sqrt(1000)) = 32 fake data elements. We can then duplicate these into two sets, A and B, and use the cross product A×B to produce our final mocked data elements. While this may not be perfectly realistic, it should suffice for most practical purposes.
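The arithmetic and the pairing can be sketched in Python. The post does not say how the two elements of each pair are combined into a final value, so joining them with a space is an assumption, as are the function names and the placeholder seed values (which would come from the LLM in practice).

```python
import math
from itertools import islice, product

def required_seed_count(rows):
    """Smallest k with k * k >= rows, i.e. ceil(sqrt(rows))."""
    return math.isqrt(rows - 1) + 1 if rows > 0 else 0

def cross_product_values(seeds, rows, sep=" "):
    """Combine seed values pairwise (A x B with A = B = seeds)."""
    if len(set(seeds)) ** 2 < rows:
        raise ValueError("not enough distinct seed values")
    pairs = product(seeds, repeat=2)
    # Joined values stay unique as long as seeds cannot be confused
    # once concatenated around `sep`.
    return [a + sep + b for a, b in islice(pairs, rows)]

k = required_seed_count(1_000)            # 32, since 32 * 32 = 1024 >= 1000
seeds = [f"phrase{i}" for i in range(k)]  # placeholders for LLM output
values = cross_product_values(seeds, 1_000)
```

Only 32 values are requested from the model, yet the cross product yields up to 1,024 distinct combinations, enough to cover the 1,000 rows.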

Note that we don’t discard the older pipeline. Instead, we use zero-shot prompting to have our LLM classify “would-be-overfitted” columns into a “NoneOfTheAbove” category. We then only need to parse and generate mock data for columns corresponding to this “NoneOfTheAbove” classification.

Here’s the revised iteration for our 2.0 “Mock Data Generation” logic:

And that’s it, folks! This was a summary of our mock data generation pipeline. Our users can now enjoy one-click, high-fidelity mock data generation based on schemas for their development and testing needs.

The future holds exciting possibilities. With the lessons we have learned from these iterations, we are looking forward to tackling more complex challenges, whether it is further optimizing for unique constraints, supporting composite types and multi schemas, or integrating more cost-driven LLM strategies. One thing is clear though, the revolution in mock data generation is underway, and we are thrilled to be leading the charge.

We encourage you to explore this feature and more at dashboard.neurelo.com. To learn more about how you can use our platform, be sure to check out our website and our getting-started tutorials.

Faker is simply awful. A year or more ago, I went down a similar path using Noteable inside ChatGPT to parameterize my data generator for Jira to eradicate Lorem Ipsum from my test data and make it look more like "real life" with GPT 3.5.

"Summary: Project Greenhorn - Enhancements and Improvements Description: As an auction platform, we constantly strive to provide an exceptional user experience for both buyers and sellers. The Project Greenhorn epic encompasses a series of enhancements and improvements aimed at optimizing our platform and ensuring a seamless auction experience. The main objectives of this project include: 1. Performance Optimization: - Identify and address performance bottlenecks throughout the platform. - Fine-tune server configurations to improve overall system responsiveness. - Optimize database queries to reduce latency and improve page load times. 2. User Interface Enhancements: - Revamp the user interface to be more intuitive and modern. - Improve the bidding and auction management experience. - Enhance navigation and ease of use across both web and mobile interfaces. 3. Mobile Application Enhancements: - Develop a dedicated mobile application for iOS and Android platforms. - Enhance mobile browsing and bidding capabilities. - Implement push notifications for important auction activities. 4. Search and Filtering Improvements: - Enhance search functionality to provide more accurate and relevant results. - Implement advanced filtering options to refine search queries. - Integrate machine learning algorithms to improve search suggestions. 5. Security and Fraud Prevention: - Strengthen platform security to protect user data and prevent unauthorized access. - Implement robust fraud detection mechanisms to identify and mitigate fraudulent activities. - Improve data encryption and ensure compliance with industry security standards. 6. Performance Monitoring and Analytics: - Develop comprehensive monitoring tools to track system performance. - Implement analytics to gain insights into user behavior and platform usage. - Utilize data-driven decision-making to drive continuous improvement. This epic is crucial for our ongoing commitment to providing a cutting-edge auction platform. 
By addressing these key areas, we aim to enhance user satisfaction, increase engagement, and maintain our position as a market leader. Story points: TBD Priority: Medium Assignee: TBD Due date: TBD"

You can find the code and example output here: https://github.com/wjkennedy/noteableexport/tree/main/A9%20Work%20Management%20System%20Accuracy